Social network

Fakebook

“If the message is right, we don’t really care where it comes from or how it was created. That’s why it’s not such a big deal if it turns out to be fake.” This statement was made by Christian Lüth, the spokesperson for the AfD, Germany’s right-wing nationalist party. It referred to a composite image of a member of Young Union, the Christian Democrats’ youth organisation. The picture was tweeted in 2017 and supposedly showed an antifascist activist about to throw a rock. The quote illustrates that we are living in a post-truth era in which stoking fears of left-wing rioters, refugees and critical media matters more than factual accuracy.

Fake news is not a new phenomenon. Consider the forged Hitler diaries that “Stern” magazine published in 1983, or Iraq’s alleged possession of weapons of mass destruction, which served to justify the US invasion in 2003. What is new is that, thanks to social media, anyone and everyone can spread disinformation, while established media are increasingly losing their traditional gatekeeping and mediating roles.

Another problem is posed by so-called social bots: easily created computer programs that perform certain tasks automatically. They pose as real people, operate fake accounts on social networks and flood the network with fake reports and smear campaigns. Social bots exchange messages with each other, creating inauthentic trends that marginalise real conversations.

Any discussion of fake news almost automatically becomes one about Facebook. This social network has almost 2.2 billion active users, more than a quarter of the world’s population. Many young people, including in developing countries, get their information almost exclusively from Facebook.

Facebook loves extremes. And it loves news that stirs up emotions and has been shared by a large number of people. Many users find fake news appealing because it seems much more exciting than news from the established media.

Impacts of disinformation

The referendum on whether the UK should leave the EU shows how great an influence fake news can have. The British voted for Brexit after supporters spread the lie that the National Health Service would get an additional £350 million a week if the country left the EU. During the US presidential campaign, the candidate Hillary Clinton was vilified in several ways, variously portrayed as the leader of child pornography rings or as an Al-Qaeda and ISIS sympathiser. In the end, the winner was Donald Trump, someone who probably knows more about fake news than about politics.

The ties between the American president and Russia are currently under investigation. Troll factories, which use bots to spread false information online on behalf of the Russian government, are suspected of having had a significant impact on the US election.

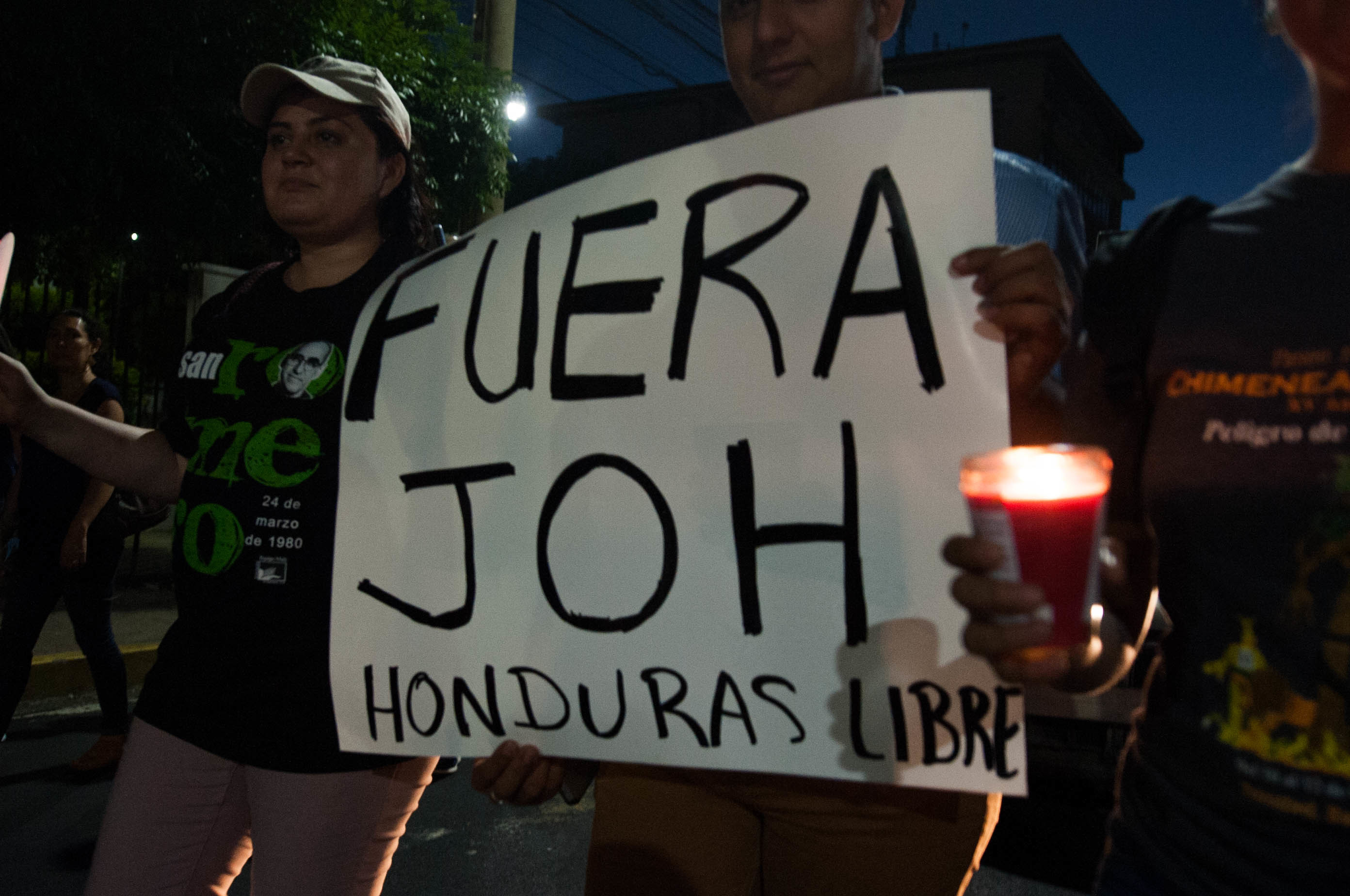

During Kenya’s presidential election campaigns in 2017, fake videos circulated on social media. They purported to be CNN and BBC reports and used fake figures to show that the incumbent, Uhuru Kenyatta, had a significant lead over his opponent, Raila Odinga. That Kenyatta’s victory met with only limited protest, which did not escalate into the kind of violence that followed the 2007 election, may be linked to voters’ exposure to such fake news. Portland, a consulting firm, surveyed 2,000 Kenyans, and 90% of the respondents reported having seen fake news before the election.

On social media, fake news is not only politically motivated but also a profitable business. Only in August 2017 did Facebook decide to stop pages that repeatedly spread disinformation from buying ads. Facebook argues that this measure will make spreading disinformation unprofitable. The ban is lifted, however, if a page stops sharing false news.

Private accounts pose a larger problem. Facebook refuses to give researchers access to anonymised data about the production and spread of fake news via private accounts. The multinational corporation justifies this stance by emphasising users’ privacy rights. Yet the protection of privacy never seemed to matter much when Facebook cooperated commercially with data brokers, as the most recent data scandal shows.

The data scandal

In March, a whistle-blower revealed that the British data analysis firm Cambridge Analytica (CA) had collected data illegally. Facebook had reportedly known about the data theft since 2015 but did nothing about it. The company merely requested that the data be deleted, without ever checking whether CA actually complied. CA used an app designed by a third party. This app was downloaded by 270,000 Facebook users. What they thought was a harmless personality test for research purposes was actually an instrument for targeting political advertising to match users’ psychological profiles.

By participating, the 270,000 users disclosed not only their own data but also that of their Facebook friends. CA was thus able to collect detailed information on up to 87 million people.

An undercover investigation by the British television station Channel 4 has revealed that CA manipulated the voting behaviour of social media users with fake news and staged sex scandals. The company, which Trump’s former chief strategist, Stephen Bannon, helped to set up, has boasted on the record that it or its parent company SCL influenced not only the US election but also the Brexit referendum, the elections in Kenya in 2013 and 2017 and many other elections on every continent. Some of these claims may be exaggerated for marketing purposes. What is certain is that Cambridge Analytica also worked for Ted Cruz, a Republican senator who sought the presidential nomination but lost to Trump in the primaries.

The CA scandal has permanently damaged Facebook’s reputation. Several companies have announced their intention to leave the social network. Mark Zuckerberg, Facebook’s founder and chief executive, had to testify before the US Congress in April. There are calls to break up his tech company because it dominates the market as a quasi-monopolist.

Especially after the 2016 US election, Facebook was heavily criticised for not addressing the spread of disinformation on its network. Trump supporters relied more unabashedly on false reports than their opponents did. It helped them that Facebook’s algorithm prioritises frequently shared content and pushes it into users’ newsfeeds, regardless of whether the content is true. A false report about the pope endorsing Trump was shared over 960,000 times before election day; a correction of this piece of disinformation was shared only 34,000 times. Nevertheless, Zuckerberg said that the accusation that Facebook had influenced the election results sounded “pretty crazy”.

In response to intense public pressure, however, Facebook came up with a half-baked approach to slowing the spread of fake news. In collaboration with external fact-checking sites, the corporation introduced a new way for its US users to identify questionable content in the spring of 2017. An article that at least two fact-checking sites considered false would be flagged as “disputed” and marked with a red warning triangle. Facebook required at least two fact-checkers because it wanted to set the bar as high as possible. The strategy passed responsibility on to the network’s users: before fact-checkers became involved, users first had to report news as potentially fake.

Ultimately, Facebook acknowledged that this strategy did not work. Fact-checking often took several days, and in that time the false item was shared thousands of times. Moreover, content that was not flagged suddenly looked more reliable. By Facebook’s own admission, the “disputed” flag was usually ineffective and, in the worst case, had the opposite effect. Demagogues like Trump turned the tables and accused their political opponents of lying. No one on Twitter cries “fake news” as often as the American president, who at the same time spreads plenty of lies of his own.

In late 2017, Facebook dropped the “disputed” flag. Instead, it has started displaying related content that puts the false report in context. According to Facebook, this less stigmatising method requires only one fact-checker, so the response is faster. The new strategy has not reduced the click rate of fake news, though the company claims that such news is not shared as often. However, it is impossible to know for sure because Facebook guards this kind of data jealously. External fact-checkers have complained that the corporation is uncooperative, which makes their work more difficult.

Patrick Schlereth edits the website of Frankfurter Rundschau, a daily newspaper.

http://www.fr.de